How to internationalize your Flutter apps?

First Reading:

Steps:

1. Add the flutter_localizations dependency to pubspec.yaml.

2. Add localizationsDelegates and supportedLocales to MaterialApp.

3. Define your own class for the app's localized resources.

4. Use AppLocalizations.
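Steps 3 and 4 come down to a per-locale lookup object that falls back to a default locale when a language or a key is missing. The sketch below shows only that pattern. It is written in Python to match the other examples on this page, so it is not Flutter's API. The class name, the locale list and the message tables are hypothetical stand-ins.

```python
from typing import Dict

# Hypothetical supported locales, playing the role of MaterialApp.supportedLocales.
SUPPORTED_LOCALES = ("en", "es")

# Hypothetical message tables keyed by language code; "es" lacks "greeting"
# on purpose to show the fallback to the default locale.
MESSAGES: Dict[str, Dict[str, str]] = {
    "en": {"title": "Hello World", "greeting": "Welcome"},
    "es": {"title": "Hola Mundo"},
}


class AppLocalizations:
    """Resolves localized strings for one locale (illustration only)."""

    def __init__(self, locale: str, default: str = "en") -> None:
        # Reduce "es_ES" or "en-US" to the bare language code.
        lang = locale.split("_")[0].split("-")[0]
        # Unsupported locales fall back to the default locale.
        self.locale = lang if lang in SUPPORTED_LOCALES else default
        self.default = default

    def get(self, key: str) -> str:
        # Try the active locale first, then the default locale's table.
        table = MESSAGES[self.locale]
        if key in table:
            return table[key]
        return MESSAGES[self.default][key]
```

For example, `AppLocalizations("es_ES").get("title")` resolves to the Spanish string, while `AppLocalizations("fr").get("title")` falls back to English.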

Demo:

How to measure the maximum throughput (TPS, transactions per second) that a single website instance (host) can handle with curl?

tps.py is a Python script that uses curl to measure the time of each transaction sent to your website.

```bash
curl --connect-timeout 10 --max-time 10 -o /dev/null -s -w "%{http_code},%{size_download},%{time_appconnect},%{time_connect},%{time_namelookup},%{time_pretransfer},%{time_starttransfer},%{time_total}" -k
```

```python
#!/usr/bin/env python3
# -*- coding: UTF-8 -*-
import getopt
import os
import re
import subprocess
import sys
import threading
import time


def help(code):
    print('tps.py -A "useragent" -C "cookies" -H "header" -P "period" -T "tps" -U "url" -O "outputdir"')
    print('examples:')
    print('tps.py -U https://www.amazon.com -T 20 -P PT5M')
    print('tps.py -U https://www.amazon.com -T 20 -P PT1H')
    sys.exit(code)


def check_args(a, c, h, p, t, u, o):
    if u == '':
        print('no URL specified!')
        help(2)
    if t == '':
        print('no TPS specified!')
        help(2)
    if p == '':
        print('no PERIOD specified!')
        help(2)
    # -w prints the timing variables of each transfer as one CSV line
    cmd = 'curl --connect-timeout 10 --max-time 10 -o /dev/null -s -w "%{http_code},%{size_download},%{time_appconnect},%{time_connect},%{time_namelookup},%{time_pretransfer},%{time_starttransfer},%{time_total}" -k'
    if a != '':
        cmd = cmd + ' -A "' + a + '"'
    if c != '':
        cmd = cmd + ' -b "' + c + '"'
    if h != '':
        cmd = cmd + ' -H "' + h + '"'
    cmd = cmd + ' ' + u
    fp = os.path.join(o if o != '' else os.getcwd(), 'tps.csv')
    tpspt(cmd, t, p, fp)


def tpspt(cmd, t, p, fp):
    try:
        f = open(fp, 'w')
    except OSError:
        print('fail to create', fp)
        sys.exit(1)
    m = re.match(r'^PT(\d+)[MH]$', p)
    if not m:
        print('wrong PERIOD pattern!', p, r'does not match "PT\d+[MH]"')
        help(2)
    # convert the period (PT5M, PT1H) to seconds
    m = int(m.group(1)) * (60 * 60 if p[-1] == 'H' else 60)
    t = float(t)
    print('-tps', t, '-period', p, ',', int(m * t), 'transactions will be executed!')
    print(cmd)
    start = time.time()
    wq = []
    ths = []
    # tps >= 1: one timer per second, each running t requests
    # tps < 1: one timer (one request) every 1/t seconds
    maxm = int(m * t) if t < 1 else m
    for x in range(0, maxm):
        delay = int(x / t) + 1 if t < 1 else x + 1
        th = threading.Timer(delay, tps, [cmd, t, wq, delay])
        th.start()
        ths.append(th)
    for th in ths:
        th.join()
    end = time.time()
    print('Finish', int(m * t), 'transactions in', int(end - start), 'seconds!')
    print('Writing results to tps.csv ......')
    with f:
        # first column is the request start time; size in KB, times in ms
        f.write('id,http_code,size_download,time_appconnect,time_connect,time_namelookup,time_pretransfer,time_starttransfer,time_total\n')
        f.writelines(wq)
    print('Done! Please check results in', fp)


def tps(cmd, t, wq, x):
    print('execute', t, 'transactions at the', x, 'seconds')
    while t > 0:
        t = t - 1  # count failed attempts too, so a failing curl cannot loop forever
        starttime = time.strftime('%Y-%m-%d %H:%M:%S', time.localtime())
        status, output = subprocess.getstatusoutput(cmd)
        # sample output
        # http_code,size_download,time_appconnect,time_connect,time_namelookup,time_pretransfer,time_starttransfer,time_total
        # 200,79059,0.011196,0.000273,0.000214,0.011224,1.091032,5.055447
        cells = output.split(',')
        if len(cells) < 8:
            print('ERROR!!!', output)
            continue
        if cells[0] != '200':
            print('WARNING!!!', cells[0], 'is not wanted response code!!!')
        row = [starttime, str(int(cells[0])), str(float(cells[1]) / 1024)]  # bytes -> KB
        row += [str(float(v) * 1000) for v in cells[2:]]  # seconds -> ms
        wq.append(','.join(row) + '\n')  # list.append is thread-safe in CPython


def main(argv):
    useragent = cookie = header = period = rate = url = outputdir = ''
    try:
        opts, args = getopt.getopt(argv, "hA:C:H:O:P:T:U:", ["useragent=", "cookie=", "header=", "outputdir=", "period=", "tps=", "url="])
    except getopt.GetoptError:
        help(1)
    for opt, arg in opts:
        if opt == '-h':
            help(0)
        elif opt in ("-A", "--useragent"):
            useragent = arg
        elif opt in ("-C", "--cookie"):
            cookie = arg
        elif opt in ("-H", "--header"):
            header = arg
        elif opt in ("-P", "--period"):
            period = arg
        elif opt in ("-T", "--tps"):
            rate = arg
        elif opt in ("-U", "--url"):
            url = arg
        elif opt in ("-O", "--outputdir"):
            outputdir = arg
    check_args(useragent, cookie, header, period, rate, url, outputdir)


if __name__ == "__main__":
    main(sys.argv[1:])
```
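In tps.py each threading.Timer fires once per second and then runs its t curl calls one after another. When a request takes longer than 1/t seconds, that second's requests therefore spill into the following seconds; they all still run, just not evenly spaced. A minimal alternative gives every request its own start offset i/t and hands it to a thread pool. The names `schedule` and `run_at_rate` are hypothetical, and `fn` stands in for one curl call.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def schedule(tps: float, seconds: int) -> List[float]:
    """Start offsets (seconds) that spread tps * seconds requests evenly."""
    total = int(tps * seconds)
    return [i / tps for i in range(total)]


def run_at_rate(fn: Callable[[], None], tps: float, seconds: int,
                workers: int = 50) -> None:
    """Submit fn at each scheduled offset; a slow call does not delay the next submission."""
    start = time.monotonic()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for offset in schedule(tps, seconds):
            delay = start + offset - time.monotonic()
            if delay > 0:
                time.sleep(delay)
            pool.submit(fn)
    # leaving the with-block waits for every submitted call to finish
```

`workers` caps concurrency. If tps multiplied by the average latency exceeds it, calls queue up and the achieved rate drops. For example, 10 TPS at about 420 ms needs only about 5 workers. `fn` could wrap `subprocess.getstatusoutput(cmd)` the same way `tps()` does.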

Example usage:

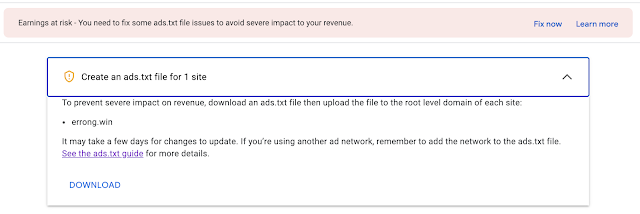

```
./tps.py -T 10 -P "PT3M" -U https://errong.win
-tps 10.0 -period PT3M , 1800 transactions will be executed!
curl --connect-timeout 10 --max-time 10 -o /dev/null -s -w "%{http_code},%{size_download},%{time_appconnect},%{time_connect},%{time_namelookup},%{time_pretransfer},%{time_starttransfer},%{time_total}" -k https://errong.win
.......
Finish 1800 transactions in 184 seconds!
Writing results to tps.csv ......
Done! Please check results in tps.csv
```

TPS Graph

As you can see, the average response time of errong.win is about 420 ms, which is not too bad.
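To get figures like that average straight from tps.csv without a chart, a few lines of Python are enough. This sketch assumes the header row tps.py writes, with time_total in milliseconds and http_code as text. The function name `summarize` is hypothetical.

```python
import csv
import io
from statistics import mean


def summarize(csv_text: str) -> dict:
    """Request count, average/max time_total (ms) and non-200 count from tps.csv text."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    totals = [float(r["time_total"]) for r in rows]
    return {
        "requests": len(rows),
        "avg_ms": mean(totals),
        "max_ms": max(totals),
        "non_200": sum(1 for r in rows if r["http_code"] != "200"),
    }
```

Usage: `with open("tps.csv", newline="") as f: print(summarize(f.read()))`.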

curl -w manual

Make curl display information on stdout after a completed transfer. The format is a string that may contain plain text mixed with any number of variables. The format can be specified as a literal "string", or you can have curl read the format from a file with "@filename" and to tell curl to read the format from stdin you write "@-". The variables present in the output format will be substituted by the value or text that curl thinks fit, as described below. All variables are specified as %{variable_name} and to output a normal % you just write them as %%. You can output a newline by using \n, a carriage return with \r and a tab space with \t.

NOTE: The %-symbol is a special symbol in the win32-environment, where all occurrences of % must be doubled when using this option.

The variables available are:

- content_type: The Content-Type of the requested document, if there was any.
- filename_effective: The ultimate filename that curl writes out to. This is only meaningful if curl is told to write to a file with the -O, --remote-name or -o, --output option. It's most useful in combination with the -J, --remote-header-name option.
- ftp_entry_path: The initial path curl ended up in when logging on to the remote FTP server.
- http_code: The numerical response code that was found in the last retrieved HTTP(S) or FTP(s) transfer.
- http_connect: The numerical code that was found in the last response (from a proxy) to a curl CONNECT request. (Added in 7.12.4)
- http_version: The HTTP version that was effectively used.
- local_ip: The IP address of the local end of the most recently done connection; can be either IPv4 or IPv6. (Added in 7.29.0)
- local_port: The local port number of the most recently done connection. (Added in 7.29.0)
- num_connects: Number of new connects made in the recent transfer. (Added in 7.12.3)
- num_redirects: Number of redirects that were followed in the request. (Added in 7.12.3)
- proxy_ssl_verify_result: The result of the HTTPS proxy's SSL peer certificate verification that was requested. 0 means the verification was successful. (Added in 7.52.0)
- redirect_url: When an HTTP request was made without -L to follow redirects, this variable will show the actual URL a redirect would take you to. (Added in 7.18.2)
- remote_ip: The remote IP address of the most recently done connection; can be either IPv4 or IPv6. (Added in 7.29.0)
- remote_port: The remote port number of the most recently done connection. (Added in 7.29.0)
- scheme: The URL scheme (sometimes called protocol) that was effectively used. (Added in 7.52.0)
- size_download: The total amount of bytes that were downloaded.
- size_header: The total amount of bytes of the downloaded headers.
- size_request: The total amount of bytes that were sent in the HTTP request.
- size_upload: The total amount of bytes that were uploaded.
- speed_download: The average download speed that curl measured for the complete download. Bytes per second.
- speed_upload: The average upload speed that curl measured for the complete upload. Bytes per second.
- ssl_verify_result: The result of the SSL peer certificate verification that was requested. 0 means the verification was successful. (Added in 7.19.0)
- time_appconnect: The time, in seconds, it took from the start until the SSL/SSH/etc connect/handshake to the remote host was completed. (Added in 7.19.0)
- time_connect: The time, in seconds, it took from the start until the TCP connect to the remote host (or proxy) was completed.
- time_namelookup: The time, in seconds, it took from the start until the name resolving was completed.
- time_pretransfer: The time, in seconds, it took from the start until the file transfer was just about to begin. This includes all pre-transfer commands and negotiations that are specific to the particular protocol(s) involved.
- time_redirect: The time, in seconds, it took for all redirection steps including name lookup, connect, pretransfer and transfer before the final transaction was started. time_redirect shows the complete execution time for multiple redirections. (Added in 7.12.3)
- time_starttransfer: The time, in seconds, it took from the start until the first byte was just about to be transferred. This includes time_pretransfer and also the time the server needed to calculate the result.
- time_total: The total time, in seconds, that the full operation lasted.
- url_effective: The URL that was fetched last. This is most meaningful if you've told curl to follow location: headers.
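Every time_* variable above is measured from the start of the transfer, so per-phase durations are differences between them. The sketch below uses hypothetical helper names. It builds a comma-separated -w format string like the one tps.py uses, parses curl's output back into a dict, and splits the cumulative timings into phases.

```python
from typing import Dict, List

# Variables from the list above, in the order they are printed.
FIELDS: List[str] = ["http_code", "time_namelookup", "time_connect",
                     "time_appconnect", "time_pretransfer",
                     "time_starttransfer", "time_total"]


def write_out_format(fields: List[str]) -> str:
    """Build a -w format string such as "%{http_code},%{time_total}"."""
    return ",".join("%{" + name + "}" for name in fields)


def parse_write_out(output: str, fields: List[str]) -> Dict[str, str]:
    """Map each variable name to the value curl printed for it."""
    values = output.strip().split(",")
    if len(values) != len(fields):
        raise ValueError("unexpected -w output: %r" % output)
    return dict(zip(fields, values))


def phase_durations(t: Dict[str, float]) -> Dict[str, float]:
    """Turn curl's cumulative time_* values (seconds) into per-phase durations."""
    return {
        "dns": t["time_namelookup"],
        "tcp": t["time_connect"] - t["time_namelookup"],
        # time_appconnect is 0 when there is no TLS handshake (plain HTTP)
        "tls": max(0.0, t["time_appconnect"] - t["time_connect"]),
        # from "transfer about to begin" to "first byte about to be transferred"
        "server": t["time_starttransfer"] - t["time_pretransfer"],
        "transfer": t["time_total"] - t["time_starttransfer"],
    }
```

For example, `subprocess.run(["curl", "-s", "-o", "/dev/null", "-w", write_out_format(FIELDS), url], capture_output=True, text=True).stdout` returns the line to pass to `parse_write_out`. Convert the time_* values with `float()` before calling `phase_durations`.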

Python: How to run a function "foo" every second for a few minutes or hours

Scenario

I needed to run a function foo every second, for a few minutes (say 5).

foo() sends 100 HTTP requests (with JSON payloads) to the server and prints the JSON responses.

In short, I have to issue 100 HTTP requests per second for 5 minutes.
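Python's standard sched module offers another way to do this besides the threading.Timer approach below. It schedules the calls against absolute deadlines on a single thread. This is a minimal sketch; `run_every_second` is a hypothetical name, and `fn` is whatever function you need to repeat.

```python
import sched
import time
from typing import Callable


def run_every_second(fn: Callable[[], None], duration_s: float,
                     interval_s: float = 1.0) -> None:
    """Run fn at start + k * interval_s for each tick within duration_s."""
    scheduler = sched.scheduler(time.monotonic, time.sleep)
    start = time.monotonic()
    for k in range(int(duration_s / interval_s)):
        # Absolute deadlines, so the time fn takes does not accumulate as drift.
        scheduler.enterabs(start + k * interval_s, 1, fn)
    scheduler.run()  # blocks until every scheduled call has run
```

`run_every_second(foo, 5 * 60)` covers the 5-minute scenario. Because fn runs on the calling thread, a foo that must send 100 requests within its second would itself need to dispatch them concurrently, for example through `concurrent.futures.ThreadPoolExecutor`.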

Using threading.Timer

```python
#!/usr/bin/env python3
import threading
import time


def foo():
    # print the current time and the number of live threads
    print(time.strftime('%Y-%m-%d %H:%M:%S', time.localtime(time.time())), threading.active_count())


# 5 minutes = 5 * 60 = 300 seconds
for x in range(0, 300):
    t = threading.Timer(x + 1, foo)
    t.start()
```

Expected output

foo is executed once per second for about 5 minutes:

```
2020-05-14 00:54:49 301
2020-05-14 00:54:50 300
2020-05-14 00:54:51 299
2020-05-14 00:54:52 298
2020-05-14 00:54:53 297
2020-05-14 00:54:54 296
2020-05-14 00:54:55 295
.......
2020-05-14 00:59:44 6
2020-05-14 00:59:45 5
2020-05-14 00:59:46 4
2020-05-14 00:59:47 3
2020-05-14 00:59:48 2
```

How to kill a bunch of processes that were started with almost the same command?

Scenario

Sometimes we need to terminate processes manually. When there are only a few, it is easy to find each PID and kill it. But what if a whole bunch of processes need to be terminated? Today I ran into exactly this problem: I had started a lot of Python processes and could not stop them.

gkill Solution

Add the function below to your bash profile, then source the profile:

```bash
function gkill() {
    ps -ef | grep "${1}" | grep -v grep | awk '{print $2}' | xargs --no-run-if-empty kill -9
}
```

Then you can kill all processes that match your search pattern with the gkill command:

```bash
gkill <search content>
```

Extended knowledge

The commands related to terminating processes are:

- ps: report a snapshot of the current processes
- kill: send a signal to a process
- killall: kill processes by name
- pkill: signal processes based on name and other attributes
- skill: send a signal or report process status
- xkill: kill an X client by its X resource
- xargs --no-run-if-empty: do not run the command when the piped input is empty (a GNU xargs option), which is very useful when the pipe may produce no arguments

pgrep

pgrep looks up processes by program name and is generally used to check whether a program is running; it is common in server configuration and management. Usage: pgrep [options] pattern.

grep

grep (named after the ed command g/re/p, "globally search for a regular expression and print") is a powerful text search tool: it searches text using regular expressions and prints the matching lines. The Unix grep family includes grep, egrep, and fgrep.

In short, pgrep queries running processes, while grep searches text content.
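The gkill pipeline does three things: it keeps the `ps -ef` lines containing the pattern, drops grep's own line, and takes the PID from the second column. The same logic is sketched below as a pure Python function over `ps -ef` text. The name `matching_pids` is hypothetical. Unlike grep, it treats the pattern as a plain substring rather than a regular expression.

```python
from typing import List


def matching_pids(ps_output: str, pattern: str) -> List[int]:
    """PIDs of `ps -ef` lines containing pattern, excluding grep lines.

    Mirrors grep | grep -v grep | awk '{print $2}', except that pattern is a
    plain substring here rather than a regular expression.
    """
    pids = []
    for line in ps_output.splitlines()[1:]:  # skip the "UID PID PPID ..." header
        if pattern in line and "grep" not in line:
            pids.append(int(line.split()[1]))  # PID is the second column
    return pids
```

To act on the result, feed it the output of `subprocess.run(["ps", "-ef"], capture_output=True, text=True).stdout` and call `os.kill(pid, signal.SIGKILL)` for each PID. Skip `os.getpid()`, because a script whose own command line contains the pattern would otherwise kill itself. `pkill -f <pattern>` does the same job natively, and pgrep/pkill never report themselves as a match.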

Recommended GC log analyzer tools: GCPlot & GCeasy

GCPlot

Install

Docker Installation

You can run GCPlot in a Docker container.

To run GCPlot as-is without additional configuration, run the following command:

```bash
docker run -d -p 8080:80 gcplot/gcplot
```

Once the container has started, the platform is accessible from your host machine at http://127.0.0.1:8080.

Upload GC log

JVM arguments

```
-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+PrintGCDateStamps -XX:+PrintTenuringDistribution -XX:+PrintGCCause -XX:+PrintHeapAtGC -XX:+PrintAdaptiveSizePolicy -XX:+UnlockDiagnosticVMOptions -XX:+G1SummarizeRSetStats -XX:G1SummarizeRSetStatsPeriod=1 -Xloggc:/var/output/logs/garbagecollector.log -XX:+UseG1GC
```

These flags target JDK 8. From JDK 9 on, GC logging moved to unified logging (-Xlog:gc*), and several of these Print* flags are no longer accepted.

Analysis Groups

Universal GC Log Analyzer

https://gceasy.io/

It is more powerful than GCPlot, but you have to upload your GC logs to their backend and pay to see the analysis report.

GCPlot is free and open source.
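Before uploading a log to either tool, you can get a quick local overview with a few lines of Python. The sketch assumes the JDK 8 format that -XX:+PrintGCDetails produces, where each logged GC event ends with a "[Times: user=... sys=..., real=... secs]" block. The function name `gc_real_times` is hypothetical, and the sketch is no substitute for either analyzer.

```python
import re
from typing import List

# Matches the "[Times: user=0.02 sys=0.00, real=0.01 secs]" trailer that
# JDK 8's -XX:+PrintGCDetails writes after each logged GC event (assumed format).
REAL_TIME = re.compile(r"real=(\d+(?:\.\d+)?) secs")


def gc_real_times(log_text: str) -> List[float]:
    """Wall-clock seconds of every GC event that reports a [Times: ...] line."""
    return [float(m.group(1)) for m in REAL_TIME.finditer(log_text)]
```

Usage: `with open("/var/output/logs/garbagecollector.log") as f: times = gc_real_times(f.read())`, then look at `len(times)`, `max(times, default=0.0)` and `sum(times)`.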
